Dell Compellent is Dell’s flagship storage array which competes in the market with such rivals as EMC VNX and NetApp FAS. All these products have slightly different storage architectures. In this blog post I want to discuss what distinguishes Dell Compellent from the aforementioned arrays when it comes to multipathing and failover. This may help you make right decisions when designing and installing a solution based on Dell Compellent in your production environment.

Dell Compellent is Dell’s flagship storage array which competes in the market with such rivals as EMC VNX and NetApp FAS. All these products have slightly different storage architectures. In this blog post I want to discuss what distinguishes Dell Compellent from the aforementioned arrays when it comes to multipathing and failover. This may help you make right decisions when designing and installing a solution based on Dell Compellent in your production environment.

Compellent Array Architecture

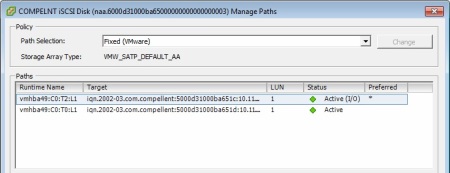

In one of my previous posts I showed how Compellent LUNs on vSphere ESXi hosts are claimed by VMW_SATP_DEFAULT_AA instead of VMW_SATP_ALUA SATP, which is the default for all ALUA arrays. This happens because Compellent is not actually an ALUA array and doesn’t have the tpgs_on option enabled. Let’s digress for a minute and talk about what the tpgs_on option actually is.

For a storage array to be claimed by VMW_SATP_ALUA it has to have the tpgs_on option enabled, as indicated by the corresponding SATP claim rule:

# esxcli storage nmp satp rule list

Name Transport Claim Options Description ------------------- --------- ------------- ----------------------------------- VMW_SATP_ALUA tpgs_on Any array with ALUA support

This is how Target Port Groups (TPG) are defined in section 5.8.2.1 Introduction to asymmetric logical unit access of SCSI Primary Commands – 3 (SPC-3) standard:

A target port group is defined as a set of target ports that are in the same target port asymmetric access state at all times. A target port group asymmetric access state is defined as the target port asymmetric access state common to the set of target ports in a target port group. The grouping of target ports is vendor specific.

This has to do with how ports on storage controllers are grouped. On an ALUA array even though a LUN can be accessed through either of the controllers, paths only to one of them (controller which owns the LUN) are Active Optimized (AO) and paths to the other controller (non-owner) are Active Non-Optimized (ANO).

Compellent does not present LUNs through the non-owning controller. You can easily see that if you go to the LUN properties. In this example we have four iSCSI ports connected (two per controller) on the Compellent side, but we can see only two paths, which are the paths from the owning controller.

If Compellent presents each particular LUN only through one controller, then how does it implement failover? Compellent uses a concept of fault domains and control ports to handle LUN failover between controllers.

Compellent Fault Domains

This is Dell’s definition of a Fault Domain:

Fault domains group front-end ports that are connected to the same Fibre Channel fabric or Ethernet network. Ports that belong to the same fault domain can fail over to each other because they have the same connectivity.

So depending on how you decided to go about your iSCSI network configuration you can have one iSCSI subnet / one fault domain / one control port or two iSCSI subnets / two fault domains / two control ports. Either of the designs work fine, this is really is just a matter of preference.

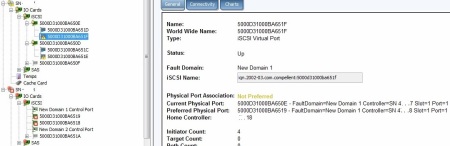

You can think of a Control Port as a Virtual IP (VIP) for the particular iSCSI subnet. When you’re setting up iSCSI connectivity to a Compellent, you specify Control Ports IPs in Dynamic Discovery section of the iSCSI adapter properties. Which then redirects the traffic to the actual controller IPs.

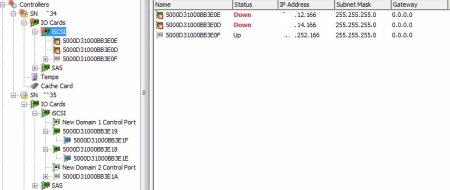

If you go to the Storage Center GUI you will see that Compellent also creates one virtual port for every iSCSI physical port. This is what’s called a Virtual Port Mode and is recommended instead of a Physical Port Mode, which is the default setting during the array initialization.

Failover scenarios

Now that we now what fault domains are, let’s talk about the different failover scenarios. Failover can happen on either a port level when you have a transceiver / cable failure or a controller level, when the whole controller goes down or is rebooted. Let’s discuss all of these scenarios and their variations one by one.

1. One Port Failed / One Fault Domain

If you use one iSCSI subnet and hence one fault domain, when you have a port failure, Compellent will move the failed port to the other port on the same controller within the same fault domain.

In this example, 5000D31000B48B0E and 5000D31000B48B0D are physical ports and 5000D31000B48B1D and 5000D31000B48B1C are the corresponding virtual ports on the first controller. Physical port 5000D31000B48B0E fails. Since both ports on the controller are in the same fault domain, controller moves virtual port 5000D31000B48B1D from its original physical port 5000D31000B48B0E to the physical port 5000D31000B48B0D, which still has connection to network. In the background Compellent uses iSCSI redirect command on the Control Port to move the traffic to the new virtual port location.

2. One Port Failed / Two Fault Domains

Two fault domains scenario is slightly different as now on each controller there’s only one port in each fault domain. If any of the ports were to fail, controller would not fail over the port. Port is failed over only within the same controller/domain. Since there’s no second port in the same fault domain, the virtual port stays down.

A distinction needs to be made between the physical and virtual ports here. Because from the physical perspective you lose one physical link in both One Fault Domain and Two Fault Domains scenarios. The only difference is, since in the latter case the virtual port is not moved, you’ll see one path down when you go to LUN properties on an ESXi host.

3. Two Ports Failed

This is the scenario which you have to be careful with. Compellent does not initiate a controller failover when all front-end ports on a controller fail. The end result – all LUNs owned by this controller become unavailable.

This is the price Compellent pays for not supporting ALUA. However, such scenario is very unlikely to happen in a properly designed solution. If you have two redundant network switches and controllers are cross-connected to both of them, if one switch fails you lose only one link per controller and all LUNs stay accessible through the remaining links/switch.

4. Controller Failed / Rebooted

If the whole controller fails the ports are failed over in a similar fashion. But now, instead of moving ports within the controller, ports are moved across controllers and LUNs come across with them. You can see how all virtual ports have been failed over from the second (failed) to the first (survived) controller:

Once the second controller gets back online, you will need to rebalance the ports or in other words move them back to the original controller. This doesn’t happen automatically. Compellent will either show you a pop up window or you can do that by going to System > Setup > Multi-Controller > Rebalance Local Ports.

Conclusion

Dell Compellent is not an ALUA storage array and falls into the category of Active/Passive arrays from the LUN access perspective. Under such architecture both controller can service I/O, but each particular LUN can be accessed only through one controller. This is different from the ALUA arrays, where LUN can be accessed from both controllers, but paths are active optimized on the owning controller and active non-optimized on the non-owning controller.

From the end user perspective it does not make much of a difference. As we’ve seen, Compellent can handle failover on both port and controller levels. The only exception is, Compellent doesn’t failover a controller if it loses all front-end connectivity, but this issue can be easily avoided by properly designing iSCSI network and making sure that both controllers are connected to two upstream switches in a redundant fashion.