Disclamer: this configuration is not validated by either VMware or Red Hat. Make sure it is applicable to your use case and thoroughly test before implementing in production.

Overview

If you landed on this page I trust you already know what Ansible is. It’s a great configuration management tool centred around using YAML to describe the desired state configuration of your various infrastructure components. This desired state is captured in what Ansible calls playbooks, which once written, can then be used in a repeatable way to deploy brand new components or enforce configuration on already deployed ones.

If you landed on this page I trust you already know what Ansible is. It’s a great configuration management tool centred around using YAML to describe the desired state configuration of your various infrastructure components. This desired state is captured in what Ansible calls playbooks, which once written, can then be used in a repeatable way to deploy brand new components or enforce configuration on already deployed ones.

Ansible can be installed and used from CLI, which is usually a good starting point. If you have multiple people using Ansible in your organization, you can also deploy AWX. It’s a free GUI add-on to Ansible, which makes managing concurrent user access to Ansible easier, by adding projects, schedules and credentials management. On top of that there is Ansible Tower. Ansible Tower is a paid version of AWX and gives you additional enterprise features and services like clustering, product support, validated upgrade paths, etc. In this article we will be focusing on Ansible Tower version of the product.

Also worth mentioning that this configuration will be based on Ansible Tower cluster feature, which lets you run all nodes as active/active. Prior to version 3.1 it was called redundancy and worked only in active/passive mode. Redundancy feature is deprecated and is outside the scope of this blog post.

Topology

Deploying multiple Ansible Tower nodes in a cluster already gives you redundancy. If one of the nodes fails you can connect to another node, by just changing your browser URL. The benefit of having a load balancer is that you have one URL you can hit and if a node goes down, such situation is handled by load balancer automatically.

In this example we will be deploying a VMware NSX load-balancer in the following topology:

Configuration

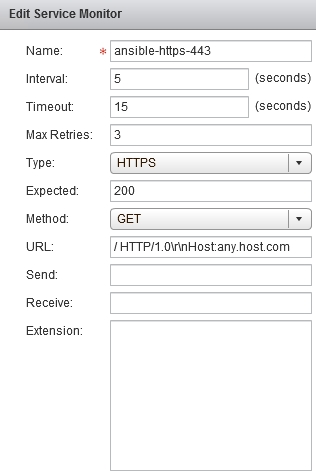

Deploying an NSX load-balancer for HTTPS port 443 is simple, you can find numerous examples of how to create application profiles, monitors, pools and VIPs in official VMware documentation or out on the Internet. But with Ansible there’s one catch. If you try to use the default HTTPS monitor that NSX load balancer comes with, you will find HTTP 400 code in Ansible nginx logs:

10.20.30.40 - - [20/Jan/2020:04:50:19 +0000] "GET / HTTP/1.0" 400 3786 "-" "-" "-" 10.20.30.40 - - [20/Jan/2020:04:50:24 +0000] "GET / HTTP/1.0" 400 3786 "-" "-" "-" 10.20.30.40 - - [20/Jan/2020:04:50:29 +0000] "GET / HTTP/1.0" 400 3786 "-" "-" "-"

And an error in NSX load balancer health check:

As it turns out, when you make a HTTP request to Ansible Tower, specifying HTTP “Host” header is a requirement. Host header simply contains the hostname of the server you’re making a request to. Browsers add this header automatically, that’s why you’re not going to see any errors, when accessing Ansible Tower Using Firefox or Chrome. But NSX doesn’t add this header to the monitor checks by default, which makes Ansible Tower upset.

Here is the trick you need to do to make Tower happy:

Now nginx logs show success code 200:

10.20.30.40 - - [21/Jan/2020:22:54:42 +0000] "GET / HTTP/1.0" 200 11337 "-" "-" "-" 10.20.30.40 - - [21/Jan/2020:22:54:47 +0000] "GET / HTTP/1.0" 200 11337 "-" "-" "-" 10.20.30.40 - - [21/Jan/2020:22:54:52 +0000] "GET / HTTP/1.0" 200 11337 "-" "-" "-"

Load balancer health check is successful:

And pool members are up and reachable:

Note: technically the host header should contain the hostname of the Tower node we’re making a health check on. But since NSX monitor is configured per pool and not per pool member, we have to use a fake hostname “any.host.com” as a workaround. When I was testing it, Tower didn’t complain.

Reference

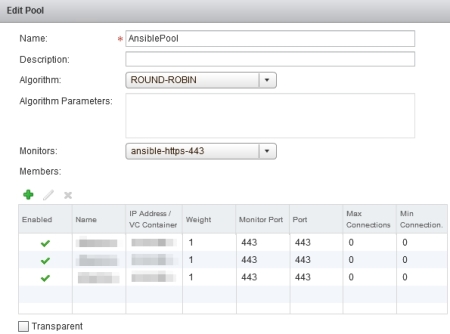

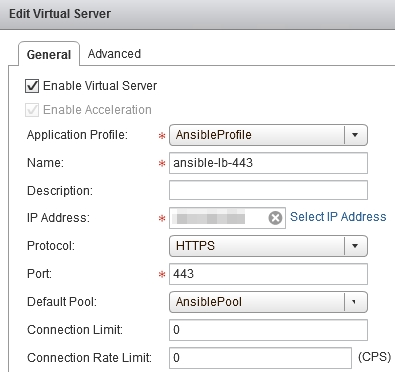

Even though I said that the rest of the load-balancer configuration is standard, I still think having screenshots for reference is helpful if you need to validate configuration. So find the full list of settings below.

Screenshot 1: Application Profile

Screenshot 2: Service Monitor

Screenshot 3: Pool

Screenshot 4: Virtual Server